What is R?

R is a free, open statistical programming language and environment. Released as open source software as part of a research project in 1995, for some time R was the preserve of academics. From 2010s onwards, with the advent of Big Data and new sub-disciplines such as Data Science, R enjoyed rapid growth and is used increasingly outside of academia, by organisations such as Google [example], Facebook [example], Twitter [example], New York Times [example] and many more.

Rather than simply a free alternative to proprietary statistical analysis software such as SPSS, R is a programming language in and of itself and can be used for:

-

Data Visualization design: PopulationLines

-

Developing interactive (visualization) software: UpSet

-

As a GIS: Geocomp with R

-

As a word processor / web publisher: R Markdown

Why R?

Free, open-source with an active community

The cost motive is obvious, but the benefits that come from being fully open-source, with a critical mass of users are many.

Firstly, there is a burgeoning array of online forums, tutorials and code examples through which to learn R. StackOverflow is a particularly useful resource for answering more individual questions.

Second, with such a large community, there are numerous expert R users who themselves contribute by developing libraries or packages that extend its use.

|

An R package is a bundle of code, data and documentation, usually hosted centrally on the CRAN (Comprehensive R Archive Network). A particularly important, though very recent, set of packages is the tidyverse: a set of libraries authored mainly by Hadley Wickham, which share a common underlying philosophy, syntax and documentation. |

R supports modern data analysis workflows

Reproducible research is the idea that data analyses, and more generally, scientific claims, are published with their data and software code so that others may verify the findings and build upon them.

Jeff Leek and Brian Caffo

In recent years there has been much introspection around how science works — around how statistical claims are made from reasoning over evidence. This came on the back of, amongst other things, a high profile paper published in Science, which found that of 100 recent peer-reviewed psychology experiments, the findings of only 39 could be replicated. The upshot is that researchers must now make every possible effort to make their work transparent. In this setting, traditional data analysis software that support point-and-click interaction is unhelpful; it would be tedious to make notes describing all interactions with, for example, SPSS. As a declarative programming language, however, it is very easy to provide such a provenance trail for your workflows in R since this necessarily exists in your analysis scripts.

Concerns around the reproducibility crisis are not simply a function of transparency in methodology and research design. Rather, they relate to a culture and incentive structure whereby scientific claims are conferred with authority when reported within the (slightly backwards) logic of Null Hypothesis Significance Testing (NHST) and p-values. This isn’t a statistics session, but for an accessible read on the phenomenon of p-hacking (with interactive graphic) see this article from the excellent FiveThirtyEight website. Again, the upshot of all this introspection is a rethinking of the way in which Statistics is taught in schools and universities, with greater emphasis on understanding through computational approaches rather than traditional equations, formulas and probability tables. Where does R fit within this? Simply put: R is far better placed than traditional software tools and point-and-click paradigms for supporting computational approaches to statistics — with a set of methods and libraries for performing simulations and permutation-based tests.

Get familiar with the R and RStudio environment

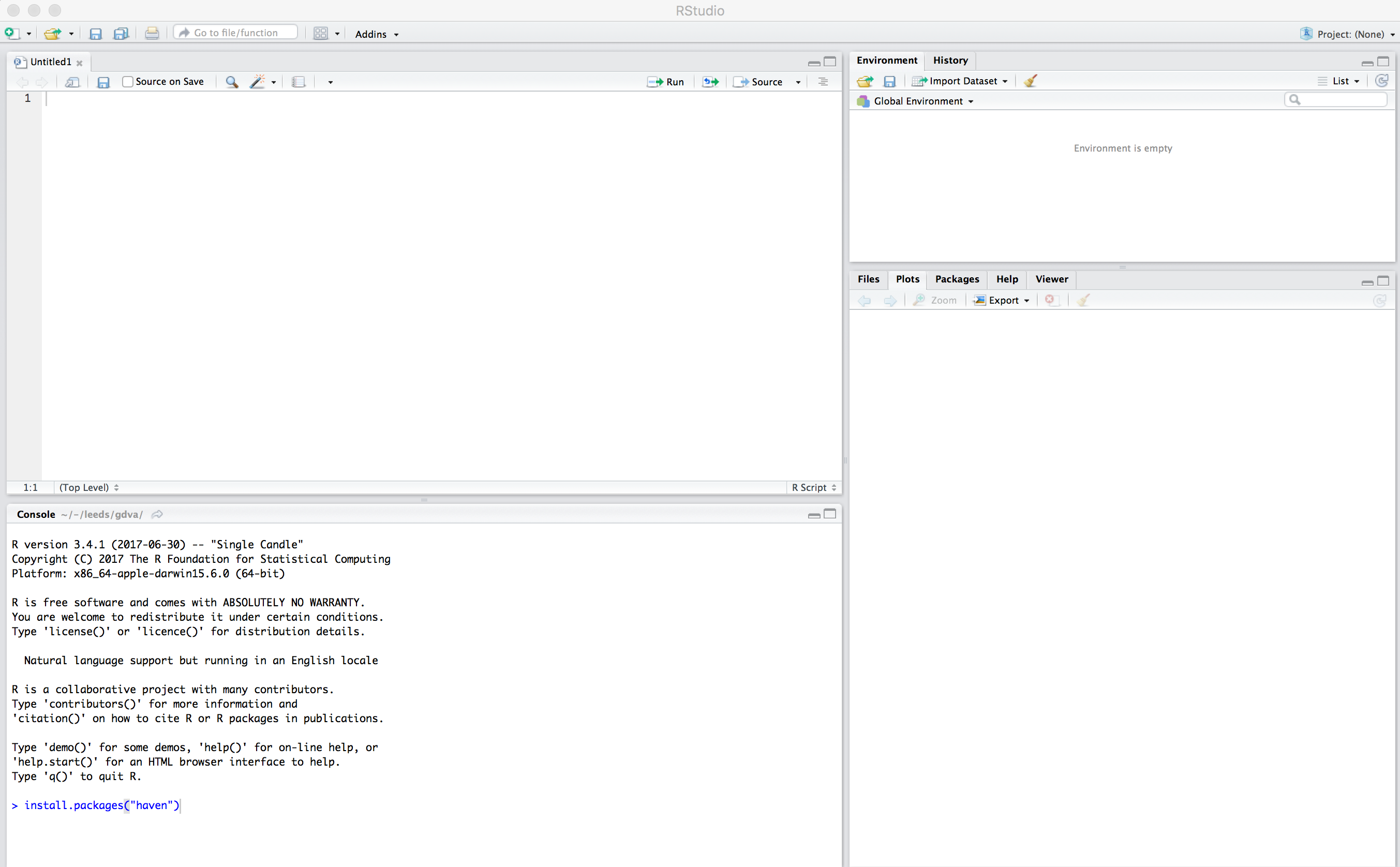

Open RStudio

You should see a set of windows roughly similar to those in the figure above. The top left pane is the Code Editor. This is where you’ll write, organise and comment R code for execution. Code snippets can be executed using Run at the top of the RStudio pane or typing cmd R (Mac) ctr R (Windows). Below this, in the bottom left pane is the R Console, in which you write and execute commands directly. To the top right is a pane with the tabs Environment and History. The purpose of these will soon be clear. In the bottom right is a pane for navigating through project directories (Files), displaying Plots, details of installed and loaded Packages and documentation on the functions and packages you’ll use (Help).

Type some console commands

You’ll initially use R as a calculator by typing commands directly into the Console. You’ll create a variable (x) and assign it a value using the assignment operator (←), then perform some simple statistical calculations using functions that are held within the (base) package.

# Create variable and assign a value.

x <- 4

# Perform some calculations using R as a calculator.

x_2 <- x^2

# Perform some calculations using functions that form baseR.

x_root <- sqrt(x_2)|

The |

Further reading

There is a burgeoning set of books, tutorials and blogs introducing R as an environment for applied data analysis. Detailed below are resources that are particularly relevant to the material and discussion introduced in this session.

R for data analysis

-

Wickham, H. & Grolemund, G. (2017), R for Data Science, O’Reilly. The primer for doing data analysis with R. Hadley presents his thesis of the data science workflow and illustrates how R and packages that form the Tidyverse support this. It is both accessible and coherent and is highly recommended.

-

Lovelace, R., Nowosad, J. & Muenchow, J. (in preparation) Geocomputation with R, CRC Press. Currently under development but written by Robin Lovelace (University of Leeds) and others, this book comprehensively introduces spatial data handling in R. It is a great complement to R for Data Science in that it draws on brand new libraries that support Tidyverse-style operations on spatial data.

New Statistics and the reproducibility crisis (non-essential)

-

Aschwanden, C. & King, R. (2015) Science Isn’t Broken: It’s just a hell of a lot harder than we give it credit for. A lovely take on the reproducibility crisis, published on FiveThirtyEight with an excellent interactive graphic.

-

Cumming, G. (2013) The New Statistics: Why and How. Geoff Cumming exposes common misconceptions associated with NHST and makes a case for a New Statistics, centred on estimation-based approaches. Despite the pre-historic graphics, his dance of the p-values video is well worth a watch.

Content by Roger Beecham | 2018 | Licensed under Creative Commons BY 4.0.